Final Year Project

Comparing Pathfinding AI with Reinforcement Learning for Autonomous Vehicles During Simple Point to Point Navigation Under the Influence of Dynamic Variables

My final year project was done with the Virtual Engineering Centre where I had previously completed a Summer Internship. This project used the plugin called AirSim alongside Stablebaselines3 which contained reinforcement algorithms as well as a drone model to use in Unreal Engine 4. The Reinforcement learning algorithm was completed in Visual Studio Code with Python as the language . The other Algorithm was completed using a plugin which was created in Unreal Engine 4 using blueprints where minor adjustments were made. This came with a point-to-point navigation system which allowed translation across all three axes.

Reinforcement Learning

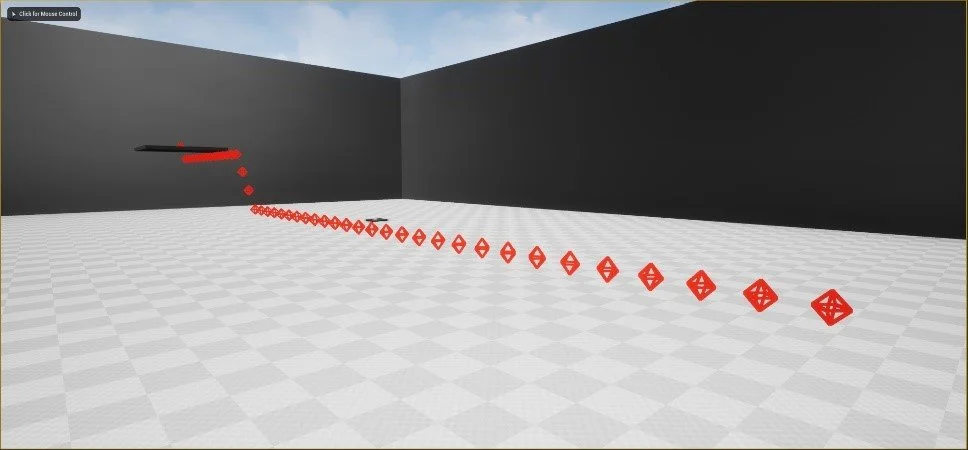

The images above show the Reinforcement Learning Algorithm learning to go from point A to Point B in a map with no dynamic objects in. The point of this was to teach the algorithm what its main goal was using a reward system before implementing dynamic variables as it retains all its data so that the trained model could be implemented. Furthermore, the platform was a non-collidable object and was there to show the user where the end point was. The left image shows the start of the process where the drone was doing random actions whereas the image on the right shows the drone consistently doing the same actions as it is achieving a high reward each time.

The reward system works by using a distance factor where the closer the agent (drone) gets to its end point the higher the reward it would receive. This is shown in the videos above on the left side where it shows the current distance from the end point and the reward varies depending on that distance. Also, a distance limit was implemented so that the drone could learn faster. This was by giving the agent a very bad reward if it went too far or crashed into any objects. The drone also had a camera display on the front so that it could learn when it crashed by determining the object it collided with the negative reward it gained.

Pathfinding AI

The Pathfinding AI used was the A* algorithm and was much less complex, and time consuming than Reinforcement Learning. Furthermore, the AI pathfinding algorithm was able to complete the level where dynamic variables were at use, and which resulted in it to be the superior algorithm when doing simple point-to-point navigation. The reason for it being less time consuming was due to it not having to be trained unlike Reinforcement Learning and also that you could simply assign an end point in which the drone would travel to.

These videos above show both algorithms attempting a level with dynamic variables where the AI Pathfinding algorithm was able to complete it with a few collisions, but the Reinforcement Learning algorithm was not.